|

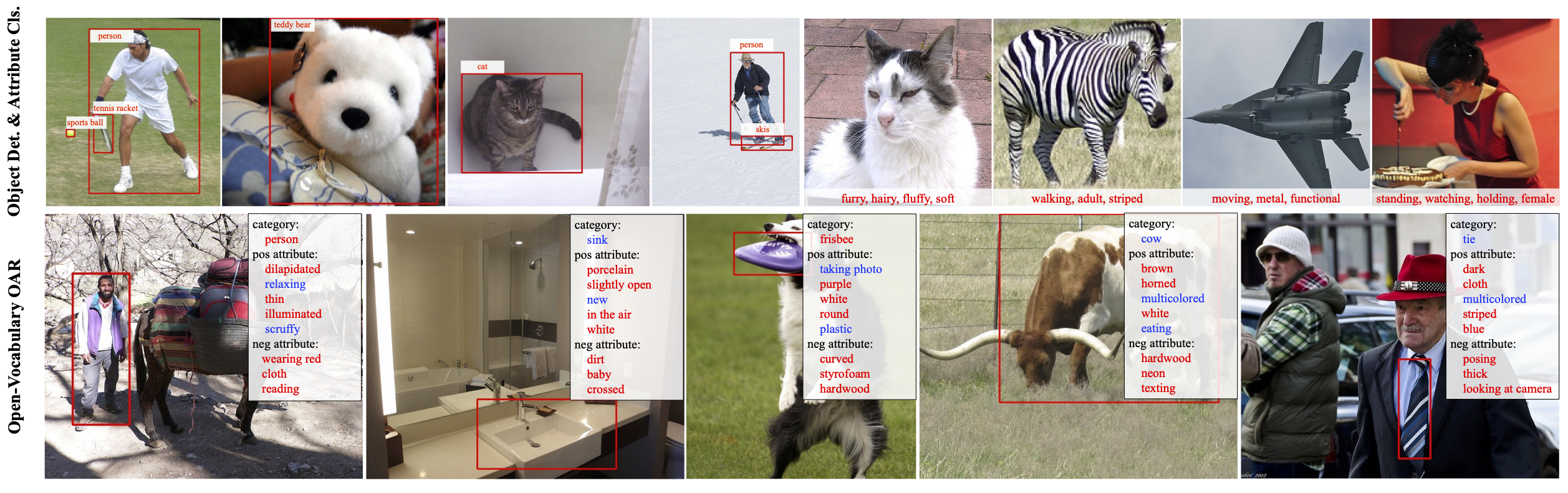

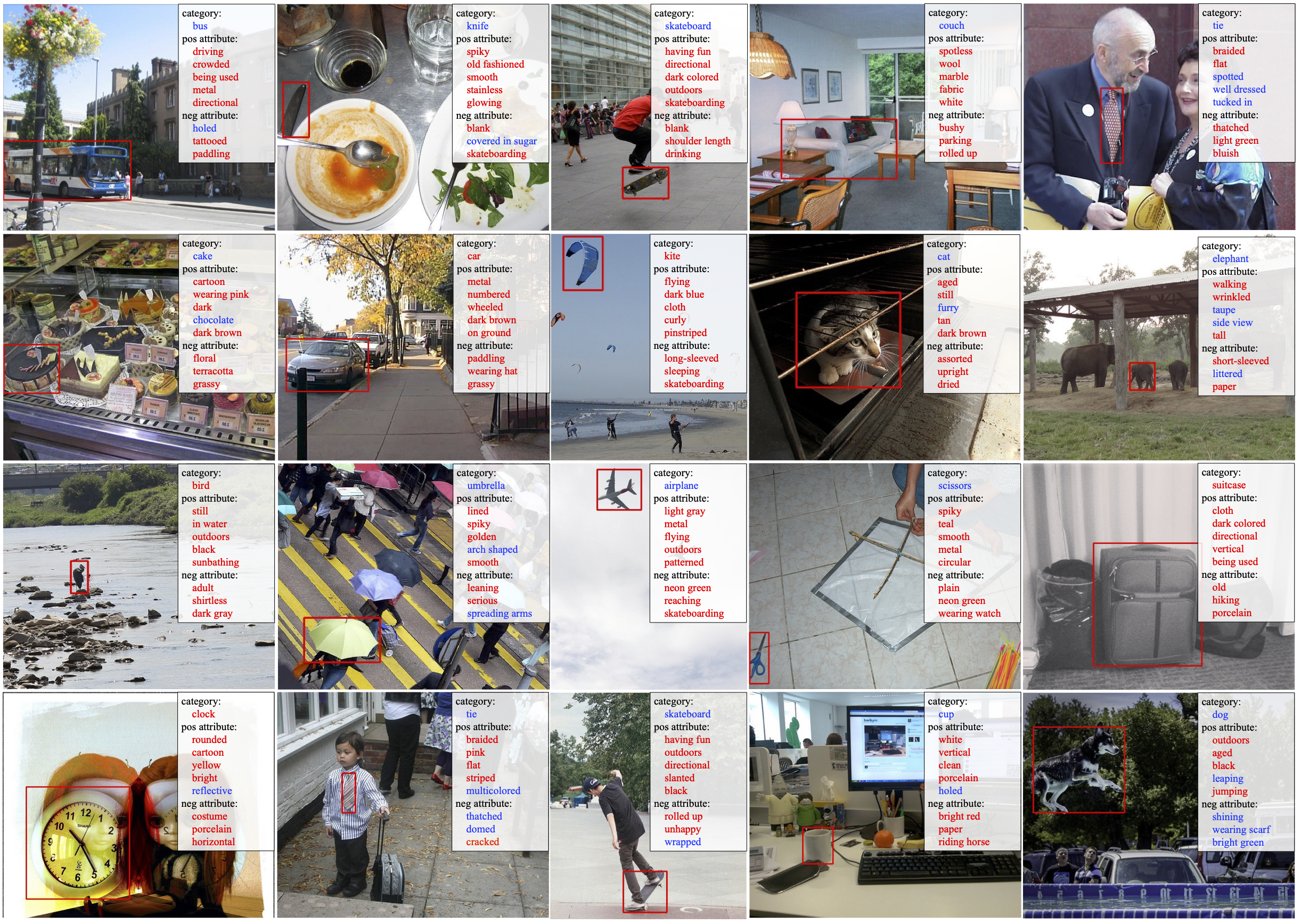

In this paper, we consider the problem of simultaneously detecting objects and inferring their visual attributes in an image, including objects and attributes for which no manual annotations are provided at the training stage, i.e., an open-vocabulary scenario.

To achieve this goal, we make the following contributions:

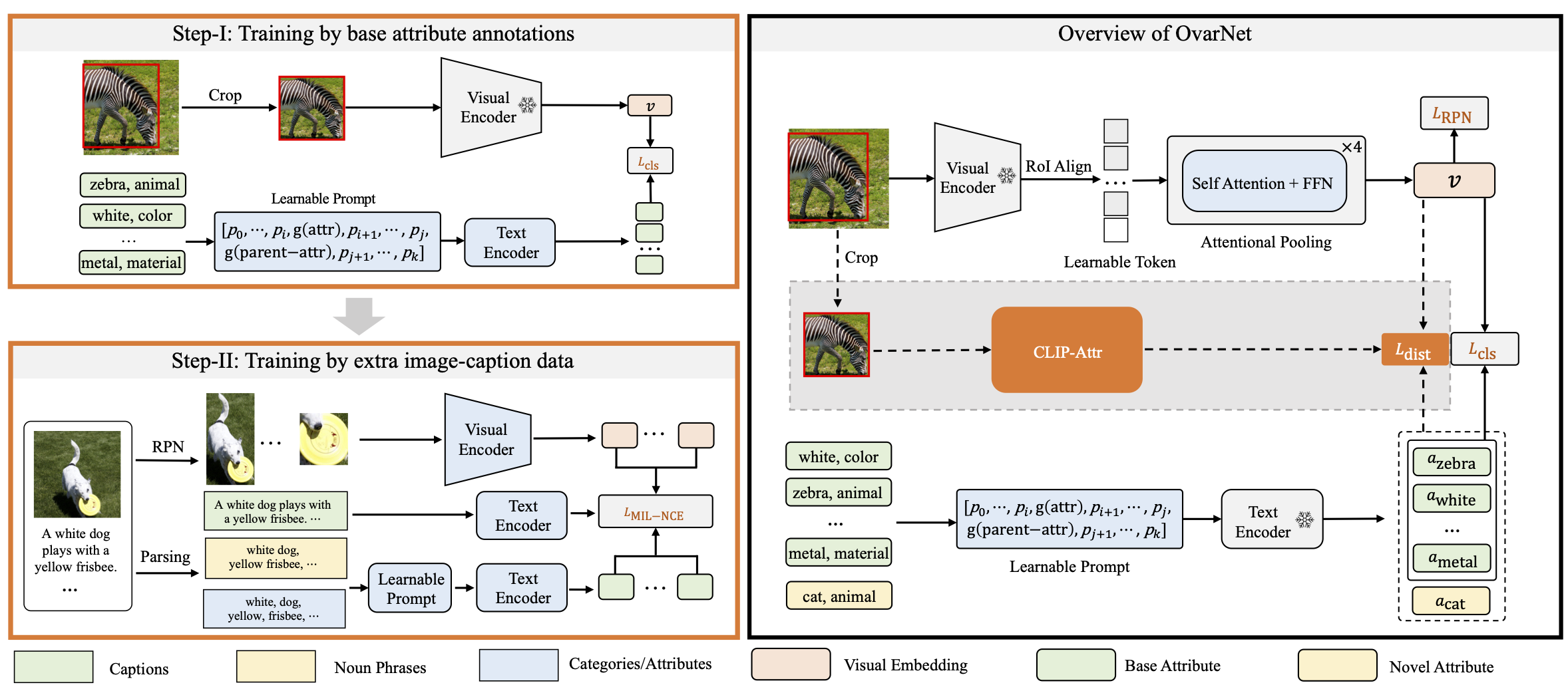

(i) we start with a naive two-stage approach for open-vocabulary object detection and attribute classification, termed CLIP-Attr. The candidate objects are first proposed with an offline RPN and later classified for semantic category and attributes;

(ii) we combine all available datasets and train with a federated strategy to finetune the CLIP model, aligning the visual representation with attributes; additionally, we investigate the efficacy of leveraging freely available online image-caption pairs under weakly supervised learning;

(iii) in pursuit of efficiency, we train a Faster-RCNN-type model end-to-end with knowledge distillation; the model performs class-agnostic object proposal and classifies semantic categories and attributes with classifiers generated from a text encoder;

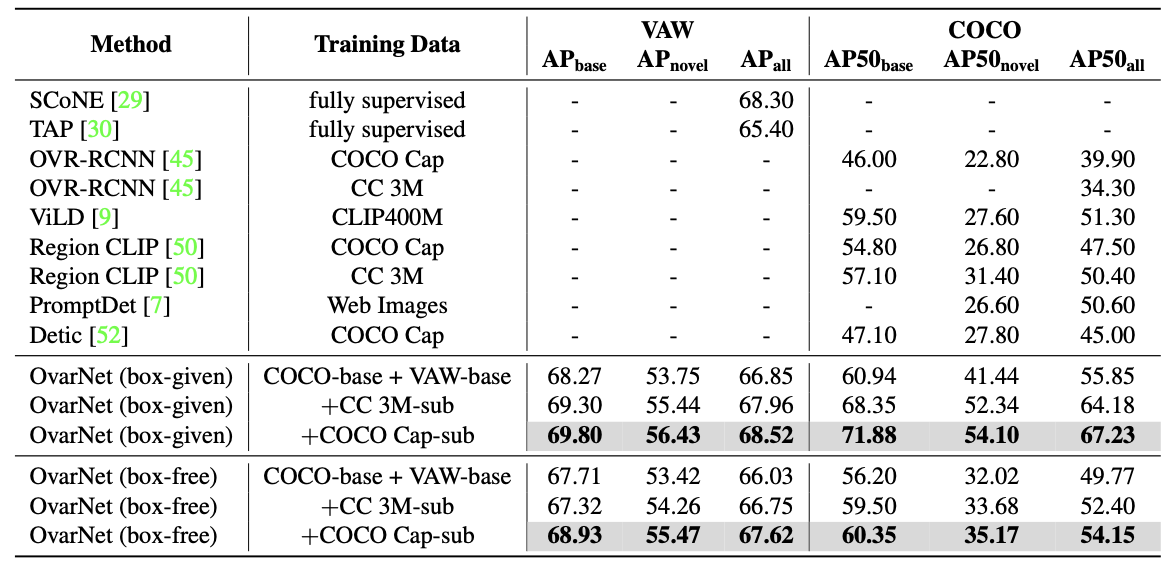

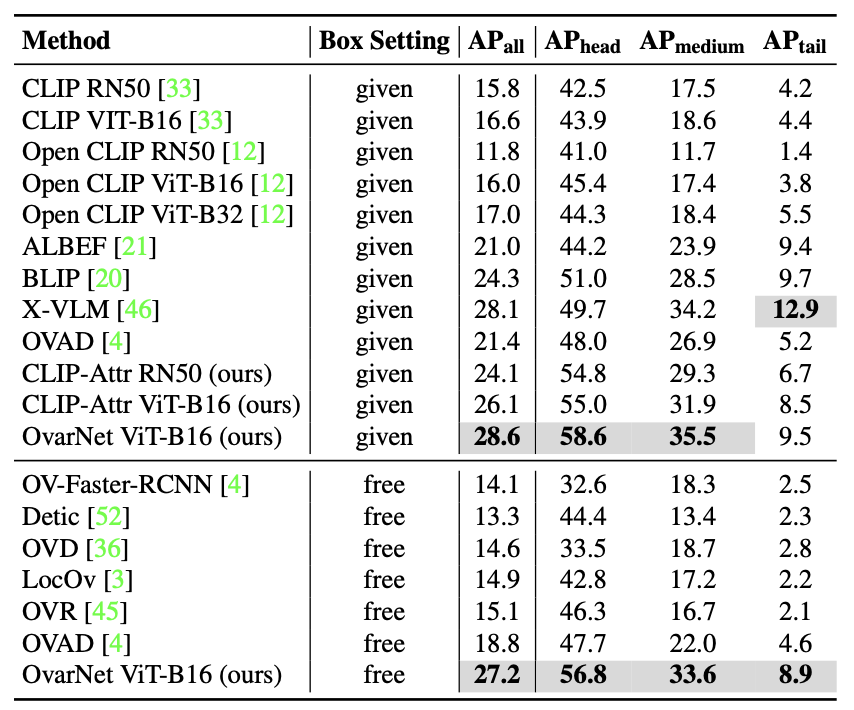

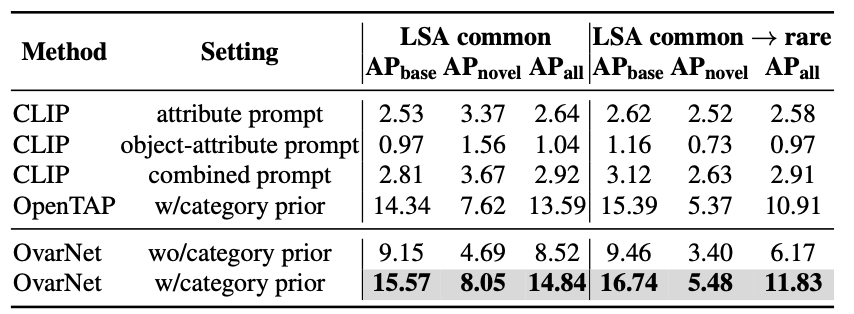

Finally, (iv) we conduct extensive experiments on the VAW, MS-COCO, LSA, and OVAD datasets, and show that recognition of semantic category and attributes is complementary for visual scene understanding, i.e., jointly training object detection and attribute prediction largely outperforms existing approaches that treat the two tasks independently, demonstrating strong generalization ability to novel attributes and categories.

|
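To make contributions (i) to (iii) more concrete, the following is a minimal sketch, not the paper's implementation, of how a region embedding might be scored against classifiers generated from a text encoder. Everything specific here is an assumption made for illustration: the toy 3-dimensional vectors stand in for real encoder outputs, the temperature of 0.07 is a placeholder, categories are scored with a softmax and attributes with independent sigmoids, and `masked_bce` reflects one common reading of a "federated" training strategy, in which attributes that a given dataset does not annotate are simply excluded from the loss.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_logits(region, classifiers, temperature=0.07):
    """Cosine similarity between one region embedding and each text-derived
    classifier vector, divided by a temperature (assumed value)."""
    r = normalize(region)
    return [
        sum(a * b for a, b in zip(r, normalize(c))) / temperature
        for c in classifiers
    ]


def softmax(logits):
    """Numerically stable softmax, used here for mutually exclusive categories."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    """Independent per-attribute probability (attributes are multi-label)."""
    return 1.0 / (1.0 + math.exp(-z))


def masked_bce(probs, targets, annotated, eps=1e-7):
    """Binary cross-entropy averaged only over attributes that the source
    dataset actually annotates; unannotated attributes are ignored."""
    losses = []
    for p, t, is_annotated in zip(probs, targets, annotated):
        if not is_annotated:
            continue
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        losses.append(-(t * math.log(p) + (1 - t) * math.log(1 - p)))
    return sum(losses) / len(losses) if losses else 0.0


# Hypothetical embeddings; in practice these would come from the visual
# backbone (region) and the text encoder (category / attribute prompts).
region_embedding = [1.0, 0.0, 0.0]
category_classifiers = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.0]]    # e.g. "dog", "car"
attribute_classifiers = [[1.0, 0.0, 0.2], [-0.5, 0.2, 1.0]]  # e.g. "brown", "metallic"

category_probs = softmax(cosine_logits(region_embedding, category_classifiers))
attribute_probs = [
    sigmoid(z) for z in cosine_logits(region_embedding, attribute_classifiers)
]

# Suppose only the first attribute is annotated (as positive) for this region.
loss = masked_bce(attribute_probs, targets=[1, 0], annotated=[True, False])
```

Because the classifiers are just vectors produced from text, a new category or attribute can be added at inference time by appending another vector to the corresponding list, which is what makes this style of scoring suitable for an open-vocabulary setting.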